
Flying in the face of privacy concerns?

19 July 2023

If you haven’t yet heard from an event technology company extolling the virtues of using facial recognition at your events, you’re one of the lucky few.

Companies specialising in Automatic Facial Recognition (AFR) software have been springing up all over the place during the past five years or so. Their primary markets are, of course, dictatorships, law enforcement, airports, retail outlets … the list goes on. But plenty of them would also like to sell their software to you, with the promise that it can make your events more efficient.

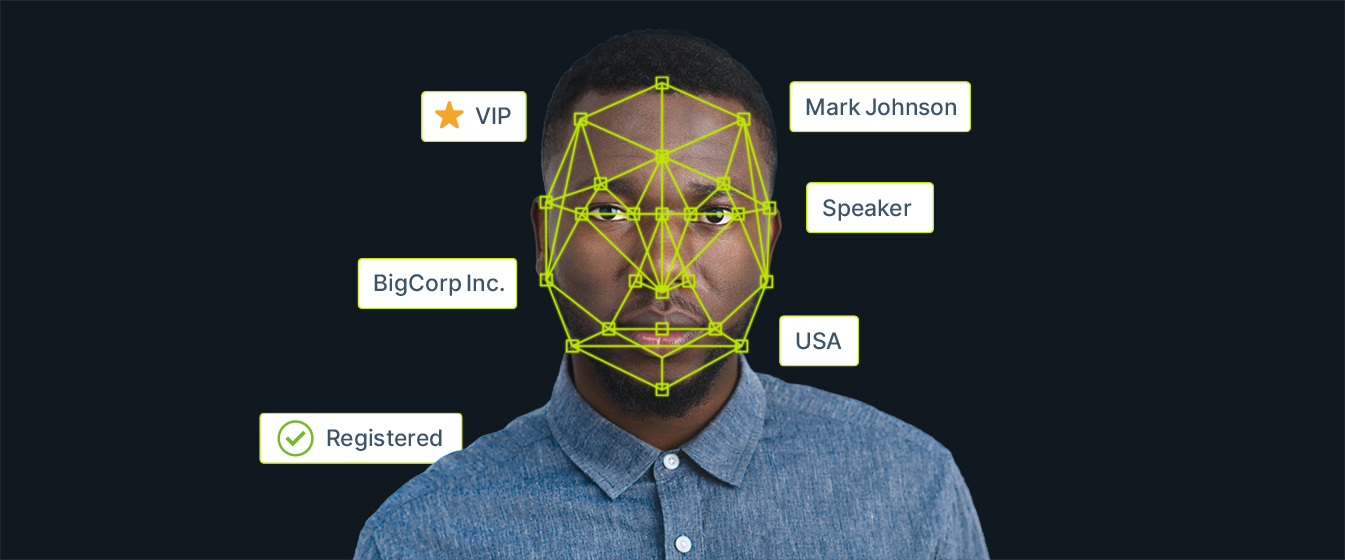

AFR is essentially the process of identifying a person, or verifying their identity, from their face. It captures, analyses and compares patterns based on the person’s unique facial details. As such, it entails the collection and storage of highly sensitive biometric personal data.

The supposed benefits of AFR for event organisers break down into two categories which we’ll call ‘creepy’ and ‘really creepy’!

The first category is the better known, and it relates to quick identification for check-in.

The idea is that you can offer a faster, effortless experience to your attendees by having them upload a photo at registration, then simply breeze through the door of your event on the day – no queues, no need to speak to anyone. This is meant to save you time and staff, increase security and make everyone happy. We’ll come back to the plausibility of all that in a moment.

The second category relates to so-called Facial Emotion Recognition (FER).

The latest FER tech can be used to scan participants’ faces, during sessions or networking breaks, and then to gauge (albeit crudely) their satisfaction at a given point using emotion recognition algorithms.

Developers claim that, using image processing software, they can map the expressions on a human face to emotions such as disgust, joy, anger, surprise, fear or sadness.

Stop! You had me at ‘disgust’!

For example, you might track the reaction of a crowd to various points during a speaker’s presentation and measure how engaged they were.

Some vendors even go so far as to claim that facial recognition tech can eliminate the need for post-event feedback. Why ask people what they thought of your event – when you can get cameras to track their movements and expressions, and then decide for yourself how satisfied they were using emotional scoring technology!

I mean, that sounds both highly reliable and in no way dystopian!

If you think this would make a large percentage of your attendees feel deeply uncomfortable, you’re almost certainly right. And even if it didn’t, legislators are already gearing up to pull the plug.

People just don’t like it

Polling throughout Europe and North America indicates widespread hostility towards, and distrust of, facial recognition software, especially when it is deployed by private businesses.

There are major ethical and privacy concerns around facial recognition tech, and for pretty good reasons.

A US federal study released in 2021 found that facial recognition systems misidentified people of colour more often than white people. The American Civil Liberties Union went so far as to call the technology racist.

Even for Caucasian men, the technology often fails to work. In the UK, police testing a facial recognition system saw 91% of its matches turn out to be false positives: the system made 2,451 incorrect identifications and only 234 correct ones when matching faces against names on a watchlist.
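As a quick check of that percentage, using only the two counts quoted for the UK police trial, the share of flagged matches that were wrong is:

$$
\frac{2{,}451}{2{,}451 + 234} = \frac{2{,}451}{2{,}685} \approx 0.913 \approx 91\%
$$

In other words, roughly nine in ten people the system flagged were not the person on the watchlist.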

Facial recognition tools work a lot better in the lab than they do in the real world. Small factors such as light, shade and how the image is captured can affect the result.

Meanwhile, privacy concerns centre on the collection of intimate personal data that, unlike a password, cannot be changed after a leak. If a database holding your biometric data is hacked, or sold to somebody with poor security infrastructure, your face is out there and you can’t replace it.

Put simply, a lot of people don’t trust this stuff. You might find your attendees would rather scan a QR code than themselves when checking into your event.

Then there’s the law

The EU General Data Protection Regulation (GDPR) took effect five years ago and forever changed how event organisers manage data. Now the European Commission is working on an AI regulation, the proposed AI Act, which will likely change how the latest technologies can be used. Or rather, not used. The proposed regulation puts real-time and remote biometric identification systems, such as facial recognition, in a category of unacceptable risk to citizens, which will be banned.

Back in May this year, the organisers of the Mobile World Congress in Barcelona were fined €200,000 ($220,000) by Spain’s data protection authority (DPA), in what is the first major sanction of a business event for using facial recognition tech in breach of the GDPR.

Ironically enough, the fine resulted from a formal complaint lodged by a speaker who specialised in data privacy issues. Dr Anastasia Dedyukhina, founder of Consciously Digital and author of Homo Distractus: Fight for your Choices and Identity in the Digital Age, detailed what led her to make the complaint in a LinkedIn post.

She was asked to verify her identity by uploading a scan of her passport online, and refused because she could not see a good reason for doing so. According to Dedyukhina, the organisers did not accept any other method of identity verification.

It appears that access to the event and identity verification were not handled in a GDPR-compliant way. Forcing 20,000 participants to upload sensitive private information (in this case, passport scans) was unlawful.

One of the main reasons behind the fine was that consent appeared to have been forced. There were also concerns about where the data was transmitted, and how it was stored and used. For the facial recognition system, the Mobile World Congress organiser, the GSMA, partnered with a company called ScanVis. ScanVis is a subsidiary of Comba, a Chinese telecom company specialising in installing mobile networks at venues and for events. Comba is headquartered in Guangzhou and listed on the Hong Kong Stock Exchange.

Of course, it’s possible to operate a facial recognition-based check-in process that would be GDPR-compliant. For example, some systems on the market create a biometric profile from a photo uploaded at registration. The system stores only that profile, linked to a customer ID rather than to the attendee’s name or contact details, and the photo itself is deleted immediately after processing. Because there is no direct link between the biometric profile and the attendee’s other personal data, the system is more likely to be compliant with GDPR.

Even so, a majority within the European Parliament is now in favour of an outright ban on facial recognition tech that scans crowds indiscriminately and in real time.

GDPR is not limited to EU territory or EU citizens: it applies to organisations established in the EU, and to organisations elsewhere that offer goods or services to, or monitor the behaviour of, people in the EU. Meanwhile, stronger privacy laws are being enacted across the US. Indiana recently became the seventh state to enact a comprehensive data privacy law, including tight restrictions on the collection and use of biometric data.

Yeah, but it would be really useful at my event!

Would it though?

Companies pushing AFR technology for events often tell us that it helps make check-in ‘fast’ and ‘effortless’. But hang on.

Your attendee still has to check in somehow, right? Is having your face scanned really that much faster or more convenient than scanning a QR code on your confirmation email or badge?

You can bet the QR code will scan faster (and more reliably) than even the best AFR. How many times have you had to take your glasses off, or put them on, to unlock your iPhone?

Of course, the other touted advantage is security. But again, how much does it really get you? And how much do you need?

Without AFR, you can still have an attendee upload a photo at registration, visually confirm that the photo matches the person at check-in, and add that photo to their badge if you’re worried about substitutions. If your event is really sensitive, you’re still going to need plenty of professional security to check robust forms of personal ID, search bags and so on anyway.

I mean, maybe if you’re organising a NATO summit it would be worth considering? Maybe?

Perhaps I’m a dinosaur, but …

Remember the scene in the first Jurassic Park movie, when Jeff Goldblum’s character tells theme park boss and velociraptor-botherer, John Hammond:

‘Your scientists were so preoccupied with whether or not they could, they didn’t stop to think if they should.’

That, I fear, is facial recognition for events. An ethically sketchy technology in full-blooded pursuit of a fresh business case.

I worry that some organisers will adopt AFR because they think it’s really cool. Meanwhile the majority of their attendees will be anything but cool with it.

And anyway, even if AFR were a shortcut to greater efficiency and security (and even if it worked really well), it would still be a slippery slope.

The more we use facial recognition in benign contexts like event check-in, the less we think about it, the more accustomed to it we become, and the less we worry about the risks it poses to our civil liberties.

It trains us to be comfortable with having our face scanned. And we shouldn’t ever become comfortable with that.